Self-Host a Private, Enterprise-Grade AI Assistant on Azure Container Instances

A step-by-step guide to deploying a secure, customizable conversational AI solution on Azure using the open source software AnythingLLM.

Generative AI is transforming every industry, but most interactions still occur through proprietary SaaS solutions that run on third-party infrastructure and offer limited customization and no control over policies and governance.

If you require strict data privacy, full control over model choice, or the freedom to experiment, self-hosting is the logical next step.

The open-source community offers a wealth of free, production-grade solutions such as LibreChat, Open WebUI, and AnythingLLM, projects with tens of thousands of stars on GitHub. These solutions support both local small language models (SLMs) and cloud-based LLMs, and offer installation options ranging from native Windows desktop applications to Linux server VMs and Docker deployments.

This post shows how to deploy the open-source AnythingLLM application on Azure Container Instances (ACI), combining enterprise-grade security with the agility of serverless containers.

Why Run Your Own AI Assistant?

Running a generative AI solution on your own cloud infrastructure is a strategic choice for anyone who values privacy, control, and customization. Here’s why:

Data Privacy & Security: Unlike SaaS chatbots that process and store data externally, a private deployment keeps sensitive information within your own environment on a trusted hyperscaler, backed by robust compliance and regulatory standards.

Ownership & Compliance: You control where your data resides, how it’s processed, and who accesses it, which is crucial for regulated industries like healthcare, finance, and legal. Azure’s enterprise-grade security and compliance help align with organizational policies.

Customization: A private chatbot lets you integrate your own documents, connect to any LLM, and tailor workflows with built-in agents and MCP support, all without vendor lock-in.

Cost & Flexibility: With ACI you pay only for the compute you use, and LLM usage is billed by token consumption.

Seamless Integration: Connect directly with internal systems and databases for richer, more context-aware interactions, capabilities not possible with generic external bots.

Picking one of the most popular GitHub projects and self-hosting it gives you privacy, compliance, flexibility, and full control.

Why Azure Container Instances?

Azure Container Instances (ACI) provide a fast and straightforward method for running containers in the cloud without the overhead of managing virtual machines or orchestrators like Kubernetes. Applications can be deployed in seconds, with support for pulling container images from Docker Hub or Azure Container Registry. Persistent storage can be mounted using Azure Files, and resource allocation can be precisely defined for CPU, memory, and GPU requirements.

ACI manages all underlying infrastructure, removing the need for manual server maintenance or patching. Public IP addresses and DNS labels can be assigned to containers, enabling direct internet access when required. Containers operate in secure, isolated environments, and integration with Azure Virtual Networks allows for secure communication with internal resources and other Azure services.

Both Linux and Windows containers are supported. With pay-as-you-go pricing, users are billed only for the resources consumed. This makes ACI suitable for deploying and managing cloud-native applications such as AnythingLLM with minimal operational overhead.

What is AnythingLLM?

AnythingLLM is a full-stack application where you can use commercial LLMs or popular open source LLMs and various VectorDB solutions to build a private ChatGPT with no compromises, that you can run locally as well as host remotely.

Retrieval-Augmented Generation (RAG) is supported out of the box, allowing large language models to use and reference your own data. You can chat with your documents, and because answers are grounded in your data, hallucinations are reduced.

AnythingLLM supports AI agents for tasks like web browsing, organizes work into workspaces, and implements the Model Context Protocol (MCP) for standardized integration with other tools. Multi-user support and role-based permissions are available in the Docker version, making it suitable for team or organizational use.

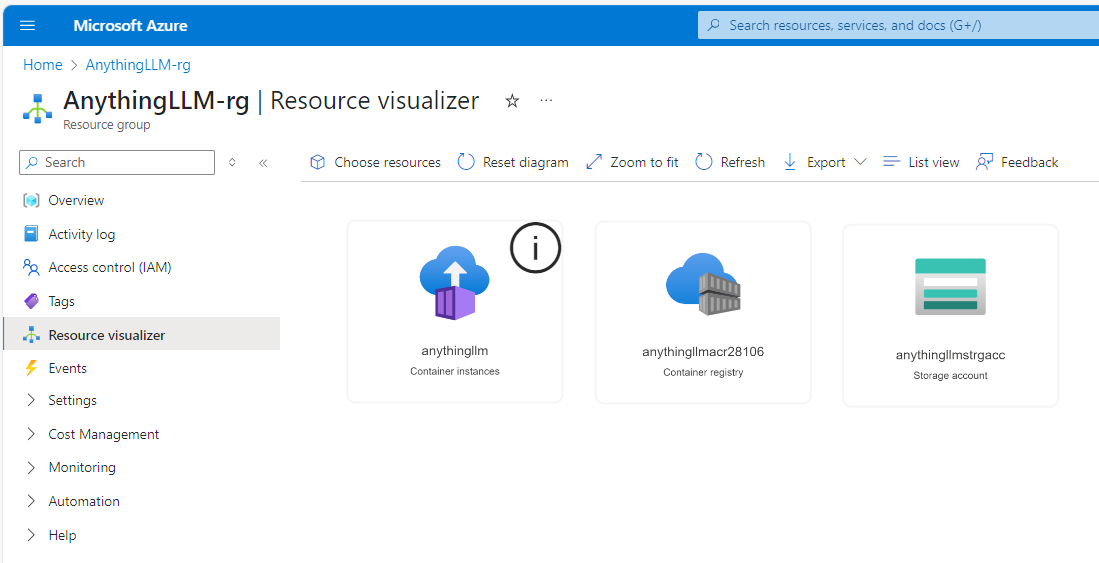

Deployment on Azure Container Instances

Let’s walk through the process of deploying AnythingLLM on Azure, using ACI and Azure Container Registry (ACR) for a clean, cloud-native setup. This approach gives you persistent storage, private image hosting, and a public endpoint for your chatbot. The instructions were tested in Windows Terminal on WSL2 with the latest version of the Azure CLI.

1. Set Your Variables

First, define your resource names and configuration. This makes the rest of the process repeatable and easy to tweak.

RESOURCE_GROUP="AnythingLLM-rg"
LOCATION="swedencentral"
STORAGE_ACCOUNT="anythingllmstrgacc"
FILE_SHARE="anythingllmstorage-fileshare"
CONTAINER_NAME="anythingllm"
DNS_LABEL="anythingllmacidpl"
ACI_IMAGE="anythingllm:latest"
ACI_CPU=2
ACI_MEMORY=8
ACI_PORT=3001
MOUNT_PATH="/app/server/storage"
ENV_STORAGE_DIR="/app/server/storage"
ACR_NAME="anythingllmacr$RANDOM"

2. Provision Azure Resources

You’ll need a resource group, a storage account, a file share (for persistent storage), and a container registry to host your image.
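Some of these resources have naming constraints that only surface as errors at creation time. As a minimal sketch (the helper names `valid_storage_account`, `valid_acr_name`, and `check_name` are hypothetical and not part of the Azure CLI), the names from step 1 can be checked locally against two documented rules: storage account names are 3 to 24 lowercase letters and digits, and container registry names are 5 to 50 alphanumeric characters.

```shell
# Local pre-flight checks for resource names (illustrative helpers only).
# Each function body runs in a subshell so LC_ALL=C does not leak out.

# Storage account names: 3-24 characters, lowercase letters and digits.
valid_storage_account() (
  LC_ALL=C
  name=$1
  [ "${#name}" -ge 3 ] && [ "${#name}" -le 24 ] || exit 1
  case $name in
    *[!a-z0-9]*) exit 1 ;;   # any other character makes the name invalid
  esac
)

# Container registry names: 5-50 characters, letters and digits.
valid_acr_name() (
  LC_ALL=C
  name=$1
  [ "${#name}" -ge 5 ] && [ "${#name}" -le 50 ] || exit 1
  case $name in
    *[!A-Za-z0-9]*) exit 1 ;;
  esac
)

# Report the result for one name. Usage: check_name <validator> <value>
check_name() {
  if "$1" "$2"; then
    echo "ok: $2"
  else
    echo "invalid: $2" >&2
    return 1
  fi
}

check_name valid_storage_account "anythingllmstrgacc"   # prints "ok: anythingllmstrgacc"
```

Both names must also be globally unique, which a local check cannot confirm; `az storage account check-name` and `az acr check-name` can be used for that.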

az group create --name "$RESOURCE_GROUP" --location "$LOCATION"

az storage account create \
  --resource-group "$RESOURCE_GROUP" \
  --name "$STORAGE_ACCOUNT" \
  --location "$LOCATION" \
  --sku Standard_LRS \
  --kind StorageV2

az storage share-rm create \
  --resource-group "$RESOURCE_GROUP" \
  --storage-account "$STORAGE_ACCOUNT" \
  --name "$FILE_SHARE" \
  --access-tier Hot \
  --output none

az acr create --resource-group "$RESOURCE_GROUP" --name "$ACR_NAME" --sku Basic --location "$LOCATION"

3. Import the AnythingLLM Image into Your Registry

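Rather than pulling directly from Docker Hub, import the image into your own ACR. This keeps the image in a registry you control, makes deployments independent of Docker Hub availability and rate limits, and often speeds up image pulls.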

az acr import \
  --name "$ACR_NAME" \
  --source docker.io/mintplexlabs/anythingllm:latest \
  --image anythingllm:latest

4. Get Storage and Registry Credentials

You’ll need the storage account key and ACR credentials for the container instance to access your resources.

STORAGE_KEY=$(az storage account keys list \
  --resource-group "$RESOURCE_GROUP" \
  --account-name "$STORAGE_ACCOUNT" \
  --query "[0].value" --output tsv)

ACR_LOGIN_SERVER=$(az acr show --name "$ACR_NAME" --query "loginServer" --output tsv | tr -d '\r')

az acr update -n "$ACR_NAME" --admin-enabled true

ACR_USERNAME=$(az acr credential show --name "$ACR_NAME" --query "username" --output tsv)
ACR_PASSWORD=$(az acr credential show --name "$ACR_NAME" --query "passwords[0].value" --output tsv)

5. Deploy the AnythingLLM Container

Now you’re ready to launch your container, mounting the Azure File Share for persistent storage and using your private image from ACR.
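The create command in this step consumes more than a dozen shell variables, and an empty one (for example, a `STORAGE_KEY` lookup that failed quietly) tends to produce a confusing error. A small guard can be run first. This is only a sketch; `require_vars` is a hypothetical helper name, not an Azure CLI feature.

```shell
# Fail early if any named variable is unset or empty (illustrative helper).
# Usage: require_vars VAR_NAME [VAR_NAME...]
require_vars() {
  missing=0
  for var_name in "$@"; do
    # Indirect lookup: read the value of the variable whose name is in $var_name.
    eval "var_value=\${$var_name:-}"
    if [ -z "$var_value" ]; then
      echo "missing or empty: $var_name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example call before deploying (commented out so the sketch has no side effects):
# require_vars RESOURCE_GROUP CONTAINER_NAME ACR_LOGIN_SERVER ACI_IMAGE \
#   STORAGE_ACCOUNT STORAGE_KEY FILE_SHARE ACR_USERNAME ACR_PASSWORD || exit 1
```

The helper passes variable names rather than values, so it can report exactly which one is missing without echoing secrets such as the storage key.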

az container create \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CONTAINER_NAME" \
  --image "$ACR_LOGIN_SERVER/$ACI_IMAGE" \
  --cpu "$ACI_CPU" --memory "$ACI_MEMORY" \
  --os-type Linux \
  --ports "$ACI_PORT" \
  --ip-address Public \
  --dns-name-label "$DNS_LABEL" \
  --azure-file-volume-share-name "$FILE_SHARE" \
  --azure-file-volume-account-name "$STORAGE_ACCOUNT" \
  --azure-file-volume-account-key "$STORAGE_KEY" \
  --azure-file-volume-mount-path "$MOUNT_PATH" \
  --environment-variables STORAGE_DIR="$ENV_STORAGE_DIR" \
  --registry-login-server "$ACR_LOGIN_SERVER" \
  --registry-username "$ACR_USERNAME" \
  --registry-password "$ACR_PASSWORD" \
  --restart-policy Always

6. Check Deployment and Logs

Once deployed, you can check the status and view logs to make sure everything is running smoothly.

az container show \
  --resource-group "$RESOURCE_GROUP" \
  --name "$CONTAINER_NAME" \
  --query "{FQDN:ipAddress.fqdn,IP:ipAddress.ip,State:provisioningState}" --output table

az container logs --resource-group "$RESOURCE_GROUP" --name "$CONTAINER_NAME"

If you need to debug or clean up, you can attach to the container’s output or delete the instance with:

az container attach --resource-group "$RESOURCE_GROUP" --name "$CONTAINER_NAME"

az container delete --resource-group "$RESOURCE_GROUP" --name "$CONTAINER_NAME" --yes

7. Access Your AnythingLLM Instance

Your chatbot is now live at:

http://$DNS_LABEL.$LOCATION.azurecontainer.io:$ACI_PORT

After completing the initial configuration wizard, check out the getting started tasks for a quick overview and onboarding.
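The container can take a little while to start answering requests after it is created. As a rough sketch, the address can be assembled from the step 1 variables and polled with `curl` until it responds. The helper names `anythingllm_url` and `wait_for_http` are hypothetical, and the attempt count and delay are arbitrary defaults, not recommended values.

```shell
# Build the public address from the DNS label, region, and port.
# Usage: anythingllm_url <dns_label> <location> <port>
anythingllm_url() {
  printf 'http://%s.%s.azurecontainer.io:%s\n' "$1" "$2" "$3"
}

# Poll a URL until it answers with a non-error HTTP status, or give up.
# Usage: wait_for_http <url> [attempts] [delay_seconds]
wait_for_http() {
  probe_url=$1
  attempts=${2:-30}
  delay=${3:-10}
  i=1
  while [ "$i" -le "$attempts" ]; do
    # --fail makes curl return non-zero on HTTP errors (status 400 and above).
    if curl --silent --fail --output /dev/null --max-time 5 "$probe_url"; then
      echo "reachable: $probe_url"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "not reachable after $attempts attempts: $probe_url" >&2
  return 1
}

# Example call (commented out because it needs a live deployment):
# wait_for_http "$(anythingllm_url "$DNS_LABEL" "$LOCATION" "$ACI_PORT")"
```

Note that the endpoint is plain HTTP on the container port, so this probe only confirms reachability, not that the connection is encrypted.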

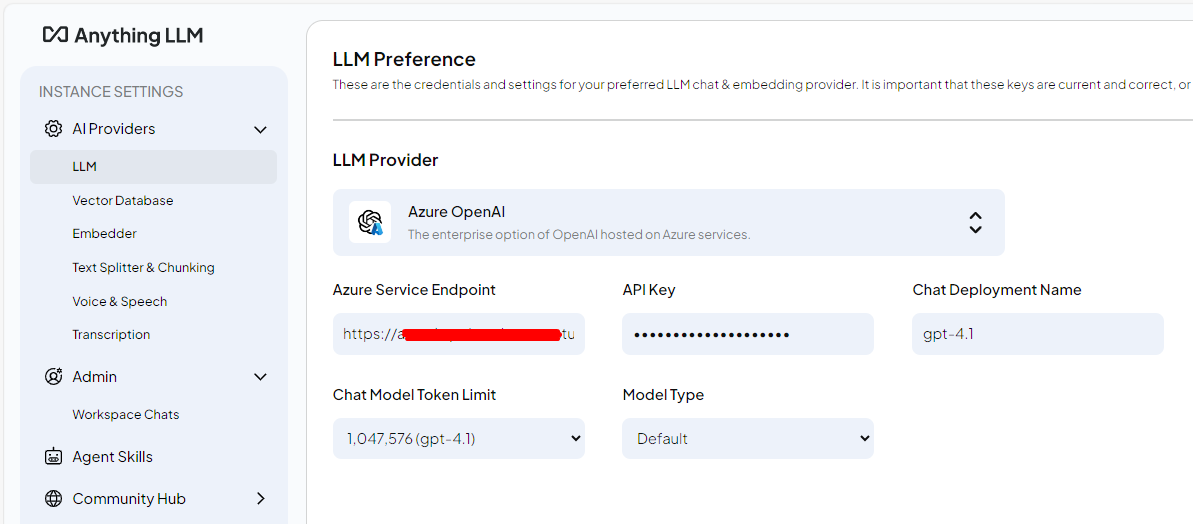

Azure OpenAI GPT-4.1 Configuration

Your AI assistant is only as good as the language model it uses. For most organizations, a frontier model like GPT-4.1 on Azure OpenAI offers a strong balance of capability, reliability, and safety for day-to-day interactions, whether you’re answering questions, summarizing documents, or supporting internal workflows.

In the UI configuration section, select Azure OpenAI as your provider and enter your endpoint, API key, and the deployment name for GPT-4.1.
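A mistyped endpoint or deployment name is easier to diagnose from the shell than from the chat UI. The sketch below sends a tiny chat completion request to the Azure OpenAI REST API to confirm that the three values work together. The helper names `azure_chat_url` and `check_deployment` are hypothetical, and the `api-version` default is an assumption; substitute a version your resource supports.

```shell
# Build the chat completions URL for a deployment.
# Usage: azure_chat_url <endpoint> <deployment> [api_version]
azure_chat_url() {
  aoai_endpoint=${1%/}   # drop one trailing slash, if present
  printf '%s/openai/deployments/%s/chat/completions?api-version=%s\n' \
    "$aoai_endpoint" "$2" "${3:-2024-10-21}"
}

# Send a minimal request; a non-zero exit status means the call failed.
# Usage: check_deployment <endpoint> <deployment> <api_key>
check_deployment() {
  curl --silent --show-error --fail \
    --header "api-key: $3" \
    --header "Content-Type: application/json" \
    --data '{"messages":[{"role":"user","content":"ping"}],"max_tokens":5}' \
    "$(azure_chat_url "$1" "$2")"
}

# Example call (commented out because it needs real credentials):
# check_deployment "https://your-azureopenai-endpoint.openai.azure.com/" "gpt-4.1" "$AZURE_OPENAI_KEY"
```

The second argument is the deployment name you chose in Azure, which may differ from the base model name.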

You can also set these values directly in your .env file. Here is an example configuration:

LLM_PROVIDER='azure'
OPEN_MODEL_PREF='gpt-4.1'
AZURE_OPENAI_MODEL_TYPE='default'
AZURE_OPENAI_ENDPOINT='https://your-azureopenai-endpoint.openai.azure.com/'
AZURE_OPENAI_TOKEN_LIMIT='1047576'
AZURE_OPENAI_KEY='YOUR_AZURE_OPENAI_KEY'
EMBEDDING_ENGINE='azure'
EMBEDDING_MODEL_PREF='text-embedding-3-large'

Wrapping Up

With this setup, you get the best of both worlds: the flexibility and privacy of self-hosting, and the power and convenience of cloud infrastructure, making it easy to bring your own documents, models, and workflows together.

For more details, check out the AnythingLLM documentation and the Azure Container Instances documentation.